Scrapy Tutorial 1: Overview

About Scrapy

Scrapy is a free and open-source web crawling framework written in Python. Originally designed for web scraping, it can also be used to extract data through APIs or as a general-purpose web crawler. It is currently maintained by Scrapinghub Ltd., a web scraping development and services company.

Architecture Overview

Data Flow

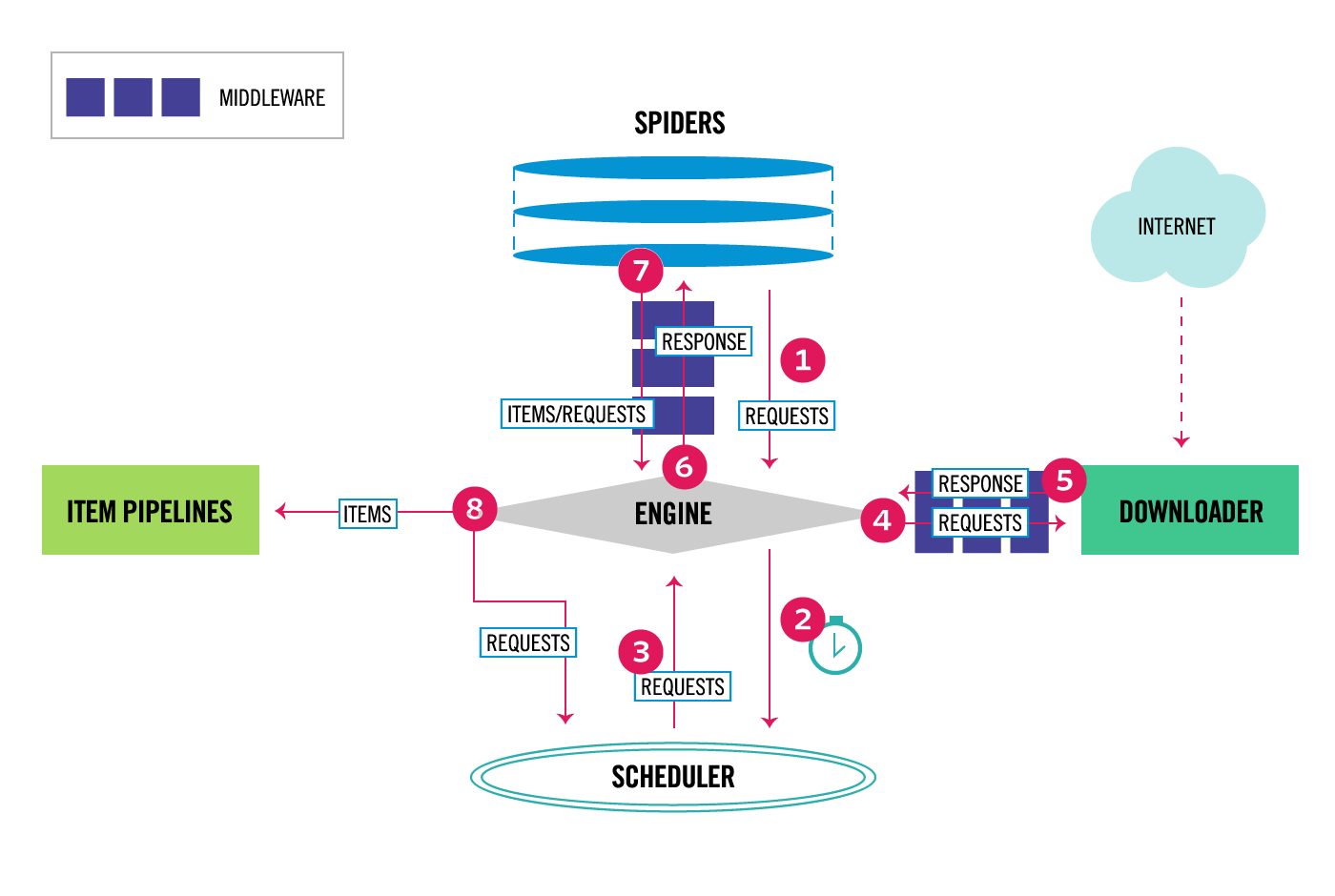

The diagram above shows an overview of the Scrapy architecture with its components and an outline of the data flow (red arrows).

The data flow is controlled by the execution engine and goes like this (as indicated by the red arrows):

- The Engine gets the initial Requests to crawl from the Spiders.

- The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.

- The Scheduler sends back the next Requests to the Engine.

- The Engine sends the Requests to the Downloader through the Downloader Middlewares (see process_request()).

- Once the Downloader finishes downloading a page, it generates a Response and sends it back to the Engine through the Downloader Middlewares (see process_response()).

- The Engine sends the received Response to the Spider for processing through the Spider Middleware (see process_spider_input()).

- The Spider processes the Response and returns the scraped Items and new Requests (to follow) to the Engine through the Spider Middleware (see process_spider_output()).

- The Engine sends the scraped Items to the Item Pipelines, then sends the new Requests to the Scheduler and asks for the next Requests to crawl.

- The process repeats (from the first step) until the Scheduler has no more Requests.
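The loop described above can be sketched in plain Python. This is a toy model, not Scrapy's implementation: the names `crawl`, `download`, `parse`, `pipeline` and the `FAKE_SITE` data are all made up for illustration, requests are represented by bare URL strings, and everything runs one request at a time, whereas Scrapy itself is asynchronous.

```python
from collections import deque

# Hypothetical in-memory "website" so the sketch runs without network access.
FAKE_SITE = {
    "/clubs": {"title": "Clubs", "links": ["/clubs/a", "/clubs/b"]},
    "/clubs/a": {"title": " Club A ", "links": []},
    "/clubs/b": {"title": " Club B ", "links": ["/clubs/a"]},
}


def download(url):
    """Stand-in for the Downloader: returns a 'response' for a URL."""
    return FAKE_SITE[url]


def parse(url, response):
    """Stand-in for a Spider: yields one item (a dict) and new requests (URLs)."""
    yield {"url": url, "title": response["title"]}
    for link in response["links"]:
        yield link


def pipeline(item):
    """Stand-in for an Item Pipeline: cleans up the scraped title."""
    item["title"] = item["title"].strip()
    return item


def crawl(start_urls):
    """Toy engine loop: schedule, download, parse, route the results."""
    scheduler = deque(start_urls)  # pending requests
    seen = set(start_urls)         # avoid scheduling the same URL twice
    items = []
    while scheduler:               # stop when the scheduler is empty
        url = scheduler.popleft()
        response = download(url)
        for result in parse(url, response):
            if isinstance(result, dict):   # scraped item -> pipeline
                items.append(pipeline(result))
            elif result not in seen:       # new request -> scheduler
                seen.add(result)
                scheduler.append(result)
    return items


items = crawl(["/clubs"])
print([item["title"] for item in items])
```

The middlewares are left out of this sketch; in Scrapy they sit on the paths between the engine and the downloader, and between the engine and the spider.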

Components

Scrapy Engine

The engine controls the data flow between all components and triggers events when certain actions occur. See Data Flow for more details.

Scheduler

The Scheduler receives requests from the engine and enqueues them so that it can feed them back to the engine later, when the engine asks for them.

Downloader

The Downloader is responsible for fetching web pages from the Internet and feeding them back to the engine.

Spiders

Spiders are custom classes written by the user to parse responses and extract from them either scraped items or additional requests to follow. Each spider handles one specific website (or a group of websites).

Item Pipelines

The Item Pipelines are responsible for processing the items extracted by the spiders. Typical tasks include cleansing, validation, and persistence (such as storing the item in a database).
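As a rough illustration of the cleansing step, here is a minimal pipeline sketch. A pipeline is an ordinary Python class with a `process_item(self, item, spider)` method; the class name `CleanWhitespacePipeline` and the sample field names are hypothetical, and the exact method signature may differ between Scrapy versions, so check the documentation for the version you use.

```python
class CleanWhitespacePipeline:
    """Hypothetical pipeline that trims whitespace from all string fields."""

    def process_item(self, item, spider):
        # Copy the pairs into a list first so the item can be updated safely.
        for key, value in list(item.items()):
            if isinstance(value, str):
                item[key] = value.strip()
        # Returning the item passes it on to the next pipeline, if any.
        return item


# Quick check outside Scrapy, using a plain dict as the item.
cleaned = CleanWhitespacePipeline().process_item(
    {"club_name": "  Example FC \n", "capacity": 1000}, spider=None
)
print(cleaned)
```

To use such a class in a project, it would be placed in pipelines.py and registered in the `ITEM_PIPELINES` setting. Validation pipelines usually reject bad items by raising `scrapy.exceptions.DropItem`, which is omitted here so the sketch runs without Scrapy installed.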

Downloader Middleware

Downloader Middleware is a hook between the Engine and the Downloader. It processes requests as they pass from the Engine to the Downloader and responses as they pass from the Downloader back to the Engine. It provides a simple mechanism for extending Scrapy with user-defined code, for example to rotate the User-Agent header or the proxy IP address automatically.
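The User-Agent case could look roughly like the sketch below. The class name `RandomUserAgentMiddleware` and the user-agent strings are invented for illustration; the `process_request(self, request, spider)` hook is the one named in the data flow above, though its exact signature may vary between Scrapy versions.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware that picks a User-Agent per request."""

    # Placeholder values; a real project would list the agents it wants to use.
    USER_AGENTS = [
        "ExampleBot/1.0 (+https://example.com/bot)",
        "ExampleBot/2.0 (+https://example.com/bot)",
    ]

    def process_request(self, request, spider):
        # Overwrite the header before the request reaches the Downloader.
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        # Returning None tells Scrapy to keep processing the request normally.
        return None
```

In a project, the class would live in middlewares.py and be enabled through the `DOWNLOADER_MIDDLEWARES` setting.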

Spider Middleware

Spider Middleware is a hook between the Engine and the Spiders. It processes spider input (responses) and spider output (items and requests), and likewise provides a simple mechanism for extending Scrapy with user-defined code.
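A small sketch of the output side, assuming items are plain dicts: the hypothetical `DropEmptyItemsMiddleware` below filters what the spider returns before it reaches the Engine. The `process_spider_output` hook is the one named in the data flow above; its signature here follows the classic synchronous form and may differ in your Scrapy version.

```python
class DropEmptyItemsMiddleware:
    """Hypothetical spider middleware that discards items with no data."""

    def process_spider_output(self, response, result, spider):
        # `result` is the iterable of items and requests produced by the spider.
        for element in result:
            if isinstance(element, dict) and not any(element.values()):
                continue  # skip items whose fields are all empty
            yield element  # pass everything else through unchanged


# Quick check outside Scrapy; the string stands in for a Request object.
spider_output = [{"club_name": "Example FC"}, {"club_name": ""}, "next-request"]
kept = list(
    DropEmptyItemsMiddleware().process_spider_output(None, spider_output, None)
)
print(kept)
```

Spider middlewares are enabled through the `SPIDER_MIDDLEWARES` setting.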

Process to Create a Scrapy Project

Create Project

First, you need to create a Scrapy project. I’ll use the English Premier League website as an example. Run the following command:

```shell
scrapy startproject EPLspider
```

The EPLspider directory with the following content will be created:

```
EPLspider/
├── EPLspider
│   ├── __init__.py
│   ├── __pycache__
│   ├── items.py
│   ├── middlewares.py
│   ├── pipelines.py
│   ├── settings.py
│   └── spiders
│       ├── __init__.py
│       └── __pycache__
└── scrapy.cfg
```

The content of each file:

- EPLspider/: Python package of the project, where you will add your code.

- EPLspider/items.py: item file of the project.

- EPLspider/middlewares.py: middlewares file of the project.

- EPLspider/pipelines.py: pipelines file of the project.

- EPLspider/settings.py: settings file of the project.

- EPLspider/spiders/: directory with spider code.

- scrapy.cfg: configuration file of the project.
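To give an idea of what the settings file holds, here is a short excerpt in the style of a generated settings.py. The first three settings follow from the project name used above; the values for `ROBOTSTXT_OBEY` and `DOWNLOAD_DELAY` are illustrative assumptions rather than values taken from this project.

```python
# EPLspider/settings.py (excerpt)

# Name of the bot, taken from the project name.
BOT_NAME = "EPLspider"

# Where Scrapy looks for spiders, and where new ones are created.
SPIDER_MODULES = ["EPLspider.spiders"]
NEWSPIDER_MODULE = "EPLspider.spiders"

# Respect the target site's robots.txt rules (illustrative choice).
ROBOTSTXT_OBEY = True

# Wait one second between requests to the same site (illustrative value).
DOWNLOAD_DELAY = 1
```

Pipelines and middlewares are also switched on in this file, through settings such as `ITEM_PIPELINES` and `DOWNLOADER_MIDDLEWARES`.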

Start with the First Spider

Spiders are classes that you define and that Scrapy uses to scrape information from a website (or a group of websites). They must subclass scrapy.Spider and define the initial request to make, optionally how to deal with links in the pages, and how to parse the downloaded page content to extract data.

This is our first Spider, EPL_spider.py, saved in the directory EPLspider/spiders/.

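```python
from scrapy.spiders import Spider


class EPLspider(Spider):
    # Name used to run the spider: scrapy crawl premierLeague
    name = 'premierLeague'
    # The crawl starts from these URLs
    start_urls = ['https://www.premierleague.com/clubs']

    def parse(self, response):
        # Links to the individual club pages (not used yet in this first version)
        club_url_list = response.css('ul[class="block-list-5 block-list-3-m block-list-2-s block-list-2-xs block-list-padding dataContainer"] ::attr(href)').extract()
        # Club names and stadium names, in page order
        club_name = response.css('h4[class="clubName"]::text').extract()
        club_stadium = response.css('div[class="stadiumName"]::text').extract()
        # Pair each club with its stadium and print them
        for name, stadium in zip(club_name, club_stadium):
            print(name, stadium)
```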

Run the Spider

Run the following command in the project folder:

```shell
scrapy crawl premierLeague
```

The name and stadium of every club in the English Premier League will be printed out.

Summary

This tutorial presented the overall architecture of Scrapy and demonstrated its basics with a small demo. In the next tutorial, we’ll extend this simple spider to collect more detailed information about the English Premier League, including clubs, players, managers, and matches.